I wrote this challenge for the first ever osu!gaming CTF. I wanted a cool adversarial ML challenge with osu! theming, which led me to writing a classifier to detect anime girls in osu! map background images.

We are provided model.pt storing model weights, server.py, and the background of Hitorigoto.

#!/usr/local/bin/python

import base64

import sys

import numpy as np

from PIL import Image

import torch

import torch.nn as nn

import torchvision.transforms as T

from torchvision.models import resnet18

model = resnet18()

model.fc = nn.Linear(model.fc.in_features, 1)

model.load_state_dict(torch.load("model.pt"))

resize = T.Resize((224, 224), antialias=None)

to_tensor = T.ToTensor()

normalize = T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

original = np.array(Image.open("hitorigoto.png"))

inp = input(f"Enter a base64 encoded image.\n")

try:

img = np.frombuffer(base64.standard_b64decode(inp), dtype=np.uint8)

img = img.reshape(original.shape)

assert np.max(np.abs(original.astype(np.int32) - img.astype(np.int32))) <= 8

except:

print("That doesn't look like hitorigoto!")

sys.exit(0)

image = Image.fromarray(img)

x = normalize(resize(to_tensor(image)).unsqueeze(0))

with torch.no_grad():

y = torch.sigmoid(model(x))

if y < 0.5:

print("That's obviously an anime girl.")

sys.exit(0)

elif y < 0.825:

print("I'm not fully convinced that's not an anime girl.")

sys.exit(0)

with open("flag.txt") as f:

print("Surely that's not an anime girl.")

print(f.read())

Based on the server code, we are given a ResNet model with the final layer modified to have just one neuron output, acting as a binary image classifier. We are asked to submit an image which satisfies two constraints: the \(L_\infty\) norm of the difference of the original image and our image \(|original - img|_\infty\leq8\); and the sigmoid of the model's output on our transformed image must be at least 0.825, indicating that the model is quite sure that the image is not an anime girl.

FGSM

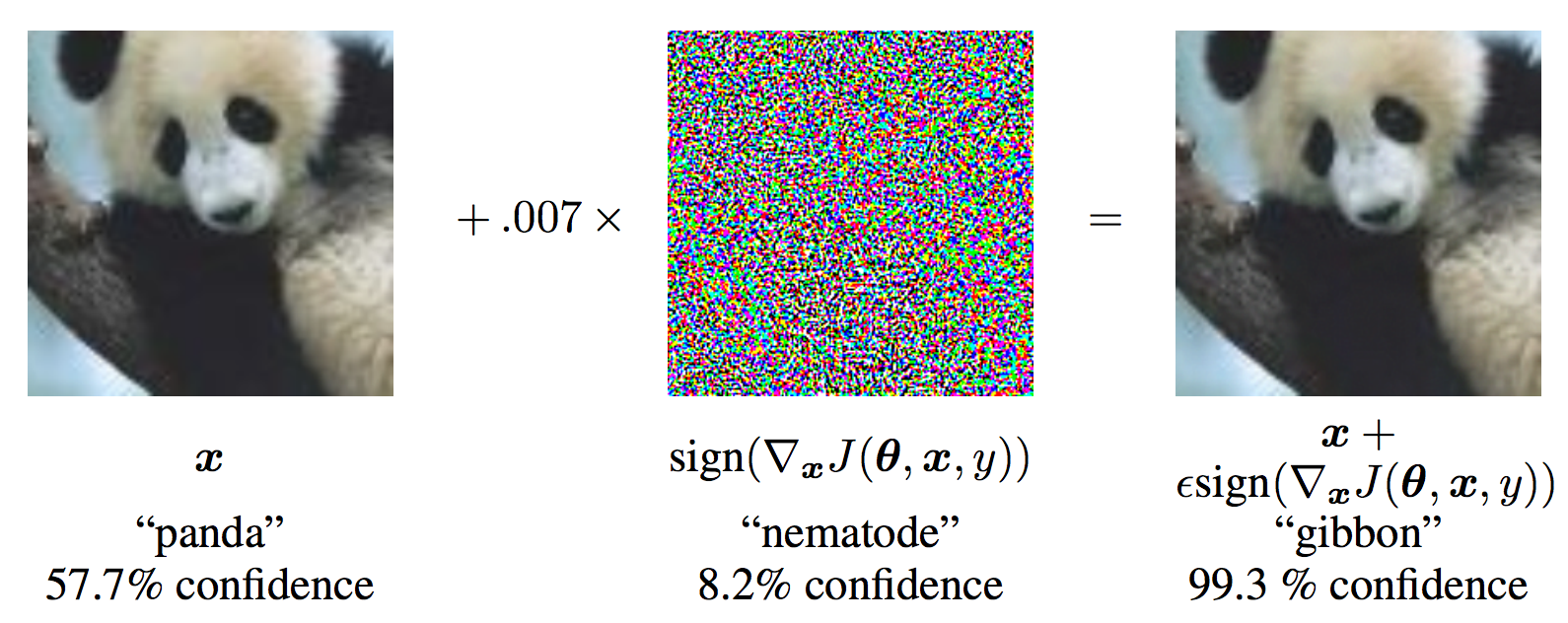

We need to generate an adversarial example for our model. There are many gradient-based methods for doing this, mostly centered around the Fast Gradient Sign Method (FGSM). The idea with FGSM is you have some input which you want to slightly perturb to cause a target network to make a misclassification.

In the above image, we have the input image \(\bm{x}\), which we slightly perturb by adding \(\epsilon\times\text{sign}(\nabla_{\bm{x}}J(\bm{\theta},\bm{x},y))\), where \(\epsilon\) is our maximum perturbation, \(J\) is the loss function (measures error), \(\bm{\theta}\) is our model parameters, and thus \(\nabla_{\bm{x}}J(\bm{\theta},\bm{x},y)\) represents taking the gradient of our loss with respect to our input. So the attack is just to add some max perturbation factor times the sign of the gradient of our loss (where sign means all elements collapse to either -1, 0, or 1 depending on their sign). This is effectively attempting to maximize the loss between the input image and the given target image, making the model "wrong" about its prediction. This is called an untargeted attack.

You can instead choose to subtract \(\epsilon\times\text{sign}(\nabla_{\bm{x}}J(\bm{\theta},\bm{x},y))\) to minimize loss and obtain an input that generates some desired output. This is called a targeted attack. In the case of a binary classifier, both methods are basically the same though.

One nice property of FGSM is that since taking the sign yields bounding in \([-1,1]\), the overall perturbation is bounded in \([-\epsilon,\epsilon]\) and thus satisfies \(L_\infty\leq\epsilon\), which is desirable for this challenge as we have the \(L_\infty\) constraint \(|original-img|_\infty\leq8\).

There are improvements to FGSM such as I-FGSM which just does multiple iterations of FGSM, adding an \(\alpha\) term to act as the max perturbation for each iteration, with \(\epsilon\) being used to clip output in the range \([original-\epsilon, original+\epsilon]\), still acting as the overall max perturbation. MI-FGSM adds the use of momentum on top of I-FGSM, speeding up convergence. I will choose to use MI-FGSM, though this challenge can be solved without momentum; it would just take more iterations.

Now, before we implement our solve, there are some important observations to make.

Resizing

For one, the server expects an image of the same size as our original image, which means that we can't just resize our image at the beginning and perform our attack on the resized image (or perhaps we could, but this would require inverting the bilinear interpolation of the resize at the end, which is either a total pain or completely impossible). We need gradients of the same shape as our input image, meaning we will have to resize every iteration and include it in the gradient computation.

Loss

Speaking of loss, we need to choose a loss function for our computation. Since this model is a classifier, binary cross-entropy loss is a good choice. We will choose to use torch.nn.BCEWithLogitsLoss, which applies sigmoid to the output logits (to convert the output into the interval \([0,1]\)) and then computes binary cross-entropy loss.

Normalization

Additionally, we should account for the normalization of our input. I found applying normalization at every iteration before requiring gradient computation yielded the fastest convergence, though it's difficult to determine exactly why.

For normalization, the operation per channel \(c\) can be represented as

$$ \bm{x}_c'=\frac{\bm{x}_c-\mu_c}{\sigma_c} $$

with mean \(\mu\) and standard deviation \(\sigma\).

If we represent our computed gradient in terms of the operations \(\bm{f}_m\) (model), \(\bm{f}_r\) (resize), and \(\bm{f}_n\) (normalization), we have

$$ \nabla_{\bm{x}}\bm{f}_m(\bm{f}_r(\bm{f}_n(\bm{x})))=\frac{\partial\bm{f}_m}{\partial\bm{f}_r\bm{f}_n(\bm{x})}\frac{\partial\bm{f}_r}{\partial\bm{f}_n(\bm{x})}\frac{\partial\bm{f}_n}{\partial\bm{x}} $$

But \(\frac{\partial\bm{f}_n}{\partial\bm{x}}=\frac{1}{\bm{\sigma}}\), so the difference between including and not including the normalization in the gradient computation is just a constant scalar factor. Perhaps this constant factor ends up being harmful to convergence over many iterations of FGSM. In any case, we should still perform the normalization on our input at every iteration so it's accounted for in our loss. Otherwise our generated image may not perform well after normalization on the server.

Image Conversion

And finally, when we send our image to the server we need to convert to \([0,255]\) pixel format, which will change our generated image slightly, changing our model output. To account for this, we perform this calculation every iteration to obtain the server prediction, allowing us to know when we're done optimizing.

Solve

Now we can implement the solve script below.

import base64

import subprocess

import numpy as np

from PIL import Image

import torch

import torch.nn as nn

import torchvision.transforms as T

from torchvision.models import resnet18

from pwn import *

model = resnet18()

model.fc = nn.Linear(model.fc.in_features, 1)

model.load_state_dict(torch.load("model.pt"))

resize = T.Resize((224, 224), antialias=None)

to_tensor = T.ToTensor()

normalize = T.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

original = to_tensor(Image.open("hitorigoto.png")).unsqueeze(0)

adversarial = original.clone()

# define epsilon as 8 pixels in [0, 255], or 8/255 in [0, 1]

epsilon = 8 / 255

alpha = 4 / 255

criterion = nn.BCEWithLogitsLoss()

momentum = torch.zeros_like(adversarial)

server_pred = 0

i = 0

while server_pred < 0.825:

# don't include normalization in gradient computation

normalized = normalize(adversarial).requires_grad_()

y = model(resize(normalized))

# targeted attack with desired output 1

loss = criterion(y, torch.ones(1, 1))

loss.backward()

# normalize gradient for momentum calculation

grad = normalized.grad / torch.mean(

torch.abs(normalized.grad), dim=(1, 2, 3), keepdim=True

)

# apply and update momentum

grad += momentum

momentum = grad

# update image

adversarial -= alpha * grad.sign()

adversarial.clamp_(0, 1)

adversarial.clamp_((original - epsilon + 1e-3), (original + epsilon - 1e-3))

server_pred = torch.sigmoid(

model(normalize(resize((adversarial * 255).floor() / 255)))

).item()

if i % 50 == 0:

print(f"Server Prediction: {server_pred}")

i += 1

print(f"Server Prediction: {server_pred}")

print(f"Total iterations: {i}")

# save and encode our image

adversarial_np = (adversarial.squeeze().permute(1, 2, 0).numpy() * 255).astype(np.uint8)

Image.fromarray(adversarial_np).save("adversarial.png")

encoded = base64.standard_b64encode(

np.ascontiguousarray(adversarial_np)

).decode()

# send base64 image to server and get flag

c = remote("chal.osugaming.lol", 7274)

c.recvline()

pow_cmd = c.recvline()

pow_out, _ = subprocess.Popen(pow_cmd, shell=True, stdout=subprocess.PIPE).communicate()

c.send(pow_out)

c.recvline()

c.sendline(encoded)

c.interactive()

This script obtains a server prediction of ~0.8267 in 940 iterations (or about 1 minute on my system), and outputs the following image:

And we get the flag: osu{anime_girls_are_a_plague}